Algorithm analyzes mammograms, signals need for more breast cancer screening

New software could facilitate more consistent assessment of cancer risk

Mallinckrodt Institute of Radiology

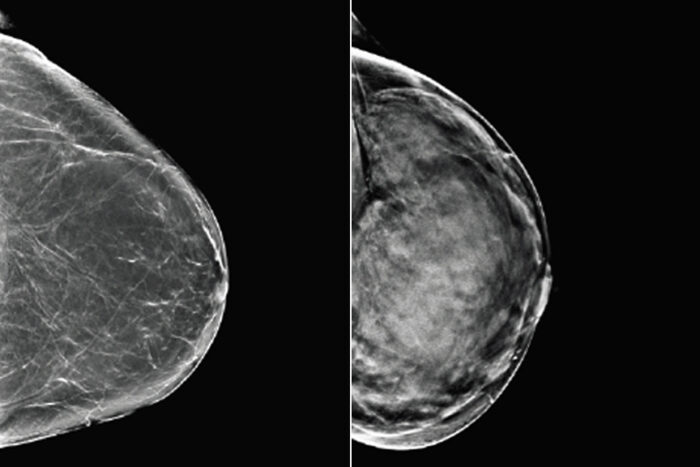

Breast tissue can range from almost entirely fatty (left) to dense (right). Since both healthy but dense breast tissue and cancerous tissue appear light on mammograms, women with dense breasts may need additional or alternative screening to detect breast cancer. Researchers at Washington University School of Medicine in St. Louis and Whiterabbit.ai have developed software that assesses breast density and can help identify women who could benefit from additional screening.

Not only does dense breast tissue increase the chance that a woman will develop breast cancer, but the tissue also creates white shadows on mammograms, obscuring the images and making it harder for doctors to identify potentially cancerous growths.

Researchers at Washington University School of Medicine in St. Louis and Whiterabbit.ai, a Silicon Valley technology startup partner, have developed software that analyzes mammograms for density to help doctors determine whether a woman has dense breast tissue and could benefit from additional or alternative screening.

The software, reported recently in the journal Radiology: Artificial Intelligence, received market clearance from the Food and Drug Administration on Oct. 30 and is now commercially available.

“We know that dense breast tissue is a risk factor for breast cancer and a challenge for screening,” said senior author Richard L. Wahl, MD, the Elizabeth E. Mallinckrodt Professor and head of the Department of Radiology. “The problem is that radiologists don’t always agree on what qualifies as a dense breast. This algorithm appears to perform quite well in comparison to skilled radiologists. By providing a quantitative assessment of breast density, it could reduce variability and help ensure that women with dense breasts are identified so they can get appropriate screening.”

On a mammogram, fatty tissue appears dark, while denser glandular tissue and cancerous tissue appear light. Radiologists classify breast tissue by density based on the amount and appearance of light areas. Without an objective way to quantify the shade and distribution of shaded areas, radiologists judge density by eye, a subjective technique that inevitably leads to disagreement in how to classify tissue.

To train an algorithm to assess breast density, the researchers started with a set of 750,752 mammograms from 57,492 patients imaged during 187,627 sessions. The mammograms occurred from 2008 to 2017 at the Joanne Knight Breast Health Center in St. Louis, which is affiliated with Siteman Cancer Center at Barnes-Jewish Hospital and the School of Medicine. The mammograms had been classified on a four-point density scale — with the bottom two groups considered not dense and the top two dense — by experienced radiologists at the university’s Mallinckrodt Institute of Radiology. The university’s Computational Imaging Science Laboratory helped organize the enormous data set and build the computational infrastructure to analyze it. The researchers at Whiterabbit.ai helped to train the model and conduct the study.

The researchers used most of the 750,000 images to train and optimize an algorithm to recognize what radiologists considered dense breast tissue. They held back about 10% of the images for use in evaluating the algorithm after it had been trained. Testing showed that the software returned results that substantially agreed with the assessment of the original interpreting radiologists. When making a binary determination — dense or not? — the algorithm was 91% accurate. At 82% accuracy, it also performed well at placing the breast tissue in the correct one of four density groups, seldom returning a result that was off by more than one density category. These results are stronger than results reported in scientific literature for similar software, the researchers said.
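The three measures described above (binary dense-or-not accuracy, four-category accuracy, and how often a result is off by more than one category) can be illustrated with a minimal Python sketch. The function names and the ten sample labels are made up for illustration; they are not the study's code or data, and the sketch assumes the four density groups are numbered 1 to 4 with 3 and 4 counted as dense.

```python
# Illustrative only: hypothetical labels, not data from the study.
# Assumes density categories are numbered 1 (least dense) to 4 (most dense),
# with categories 3 and 4 counted as "dense".

def is_dense(category: int) -> bool:
    """Map a four-point density category to the binary dense / not dense call."""
    return category >= 3

def evaluate(radiologist: list[int], algorithm: list[int]) -> dict[str, float]:
    """Compare algorithm output with radiologist labels on a held-out test set."""
    if not radiologist or len(radiologist) != len(algorithm):
        raise ValueError("label lists must be non-empty and the same length")
    n = len(radiologist)
    pairs = list(zip(radiologist, algorithm))
    return {
        # Fraction of images placed in exactly the same one of four groups.
        "four_class_accuracy": sum(r == a for r, a in pairs) / n,
        # Fraction of images where the dense / not dense call agrees.
        "binary_accuracy": sum(is_dense(r) == is_dense(a) for r, a in pairs) / n,
        # Fraction of images where the result is off by more than one category.
        "off_by_more_than_one": sum(abs(r - a) > 1 for r, a in pairs) / n,
    }

# Ten made-up test images.
radiologist_labels = [1, 2, 2, 3, 3, 4, 2, 3, 1, 4]
algorithm_labels = [1, 2, 3, 3, 2, 4, 2, 4, 1, 2]

result = evaluate(radiologist_labels, algorithm_labels)
print(result)
```

Binary accuracy is never lower than four-category accuracy, because a result can land in the wrong category (3 instead of 4, say) and still fall on the correct side of the dense / not dense line.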

The algorithm initially was trained on full-field digital mammography images, a kind of two-dimensional mammogram. 2D mammography is being replaced by digital tomosynthesis, which involves taking X-rays from many angles to create a high-resolution 3D image of the breast that allows for better cancer screening. The 3D image can be converted into so-called synthetic 2D images. The conversion process can be likened to shining a light through a glass sculpture. The shadow of the sculpture and everything inside it can be seen on the wall behind it. More than 70% of facilities today have a digital tomosynthesis scanner.
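The glass-sculpture analogy can be made concrete with a toy Python sketch that collapses a stack of slices into a single flat image by keeping the brightest value along each line of sight. This is only an illustration of the idea of projecting 3D data onto 2D; it is an assumption made for this example, not the method any scanner uses to produce synthetic 2D images, and the function name and sample values are hypothetical.

```python
# Toy illustration only: a simple brightest-value projection, chosen for clarity.
# Real synthetic 2D mammograms are produced by more elaborate methods.

def project_to_2d(volume: list[list[list[float]]]) -> list[list[float]]:
    """Collapse a volume indexed [slice][row][col] into one [row][col] image."""
    rows = len(volume[0])
    cols = len(volume[0][0])
    return [
        [max(slice_[r][c] for slice_ in volume) for c in range(cols)]
        for r in range(rows)
    ]

# Two made-up 2x2 slices: a bright spot in either slice shows up in the flat image.
volume = [
    [[0.0, 5.0], [1.0, 0.0]],
    [[3.0, 0.0], [0.0, 2.0]],
]
flat = project_to_2d(volume)
print(flat)
```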

Ideally, breast-density software should be able to analyze both traditional 2D mammograms and synthetic 2D tomosynthesis images. To assess how their software performed on the latter, the researchers tested the algorithm using a set of 1,080 synthetic 2D images from 270 Barnes-Jewish patients. The algorithm worked nearly as well on synthetic 2D images, returning results that agreed with the radiologists’ assessments 88% of the time. To improve the accuracy, the researchers gave the algorithm some additional training using a set of 500 synthetic 2D images that had been scored by radiologists. With the additional training, the accuracy rose to 91%, but the difference was not statistically significant, indicating that the algorithm was already well-suited to analyzing synthetic 2D images.

Once they had established that the algorithm performed well at judging breast density in the Joanne Knight Breast Health Center patient population, the researchers set about assessing whether it also would work in different populations.

“The problem with AI is that if you train on a specific population, you may end up with an algorithm that works great — but only for that population,” said Wahl, who also is a Siteman Cancer Center research member. “It works in St. Louis, but will it work in Kansas City? Will it work in New York?”

At 59% white, 23% African American, 3% Asian and 1% Hispanic, the population in the training set was diverse, but the limited number of images from Asian and Hispanic people meant there was a risk the algorithm would not be as accurate at identifying dense breasts in patients of those backgrounds. To ensure the algorithm would perform equitably, the researchers collaborated with colleagues at an outpatient radiology clinic in northern California, where the patient population was 58% white, 21% Asian, 7% Hispanic and 1% African American.

They ran the algorithm on a set of 6,192 synthetic 2D images from 744 patients of the California clinic. The software assessed breast density with 92% accuracy. After additional training with 500 images, the algorithm remained about 92% accurate at classifying breasts as dense or not, and its ability to correctly classify breast tissue into four density groups improved.

“Working with our brilliant collaborators at Washington University, we have shown that our algorithm is state-of-the-art for breast density assessment,” said Jason Su, co-founder and chief technology officer of Whiterabbit.ai. “At 82% accuracy, our AI software outperforms the previous best models from academic groups and, furthermore, is better than the agreement between radiologists at 67.4%. With FDA clearance and nationwide commercial availability, it is ready to make a marked difference in helping radiologists to evaluate breast density. By combining density assessment with breast cancer risk models, our continued collaboration with the university will soon help doctors to better take into account the patient’s imaging, personal and family history, giving each woman the personalized recommendation she deserves.”

Along with Wahl, the research team at Washington University included Stephen Moore, an assistant professor of radiology, and Chip Schweiss, a neuroinformatician, as well as Catherine Appleton, MD, now of SSM Health. The researchers at Whiterabbit.ai include lead author Thomas Matthews, PhD, a graduate of Washington University.